
A Unified TinyML System for Multi-modal Edge Intelligence and Real-time Visual Perception

Research Opportunity 4: Progressive Network Sparsification and Latent Feature Compression for Scalable Collaborative Learning

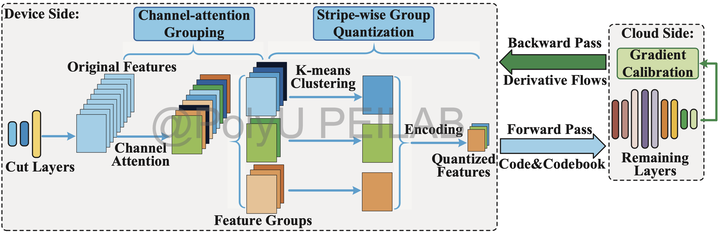

Illustration: In edge intelligence environments, new data are continuously generated on user devices and cannot be aggregated centrally because of privacy and energy concerns. These constraints call for new ways of reducing communication traffic so that a communication-efficient collaborative learning paradigm can be built. Previous methods aim to improve bandwidth utilization or apply unstructured pixel-wise compression. In contrast, we jointly capture feature redundancy at the channel level and the spatial level, and we perform hierarchical compression at both levels to achieve a much higher traffic reduction ratio. Specifically, we plan to design a more efficient feature compression method that exploits pixel-level similarity. We also plan to reorganize the features into groups according to channel significance so that the network can be pruned. In addition, we intend to calibrate the gradients of the compressed features and to support this calibration with a comprehensive theoretical analysis of the convergence rate. We expect such a co-design to reduce traffic substantially relative to existing methods without sacrificing much model accuracy, while providing good training flexibility and communication efficiency. We believe this work can contribute to the further development of edge intelligence applications.
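The two-level (channel, then spatial) compression described above can be illustrated with a minimal sketch. It is not the proposed method, and it rests on several assumptions:

- Channel significance is taken to be the L1 norm of each channel.
- "Grouping by significance" is reduced to a kept/pruned split.
- Pixel similarity is approximated by sending a block as one mean value when all its values lie within a tolerance of that mean.

All function names (`select_channels`, `compress_spatial`, `hierarchical_compress`, `decompress`) and parameters (`keep_ratio`, `block`, `tol`) are hypothetical. Gradient calibration is not modeled.

```python
import math
from typing import Dict, List, Tuple

# One H x W feature map; a feature tensor is a list of channels.
Channel = List[List[float]]
# (row, col, values): a single mean for a merged block, or the block's raw values.
Block = Tuple[int, int, List[float]]


def channel_significance(ch: Channel) -> float:
    """Assumed significance score (hypothetical choice): the L1 norm of the channel."""
    return sum(abs(v) for row in ch for v in row)


def select_channels(features: List[Channel], keep_ratio: float) -> List[int]:
    """Channel level: keep the most significant channels, prune the rest."""
    k = max(1, math.ceil(keep_ratio * len(features)))
    ranked = sorted(range(len(features)),
                    key=lambda i: channel_significance(features[i]), reverse=True)
    return sorted(ranked[:k])


def compress_spatial(ch: Channel, block: int, tol: float) -> Tuple[List[Block], int]:
    """Spatial level (assumed similarity rule): a block whose values all lie within
    `tol` of the block mean is sent as that mean; otherwise its raw values are sent.
    Returns the blocks and the number of scalars sent."""
    h, w = len(ch), len(ch[0])
    blocks: List[Block] = []
    sent = 0
    for r in range(0, h, block):
        for c in range(0, w, block):
            vals = [ch[i][j] for i in range(r, min(r + block, h))
                    for j in range(c, min(c + block, w))]
            mean = sum(vals) / len(vals)
            if all(abs(v - mean) <= tol for v in vals):
                blocks.append((r, c, [mean]))
            else:
                blocks.append((r, c, vals))
            sent += len(blocks[-1][2])
    return blocks, sent


def hierarchical_compress(features: List[Channel], keep_ratio: float = 0.5,
                          block: int = 2, tol: float = 0.05):
    """Apply the channel level, then the spatial level. Returns (packets, reduction ratio);
    the ratio counts feature scalars only and ignores index/flag overhead."""
    raw = sum(len(ch) * len(ch[0]) for ch in features)
    packets: Dict[int, List[Block]] = {}
    sent = 0
    for idx in select_channels(features, keep_ratio):
        packets[idx], n = compress_spatial(features[idx], block, tol)
        sent += n
    return packets, raw / sent


def decompress(packets: Dict[int, List[Block]], num_channels: int,
               h: int, w: int, block: int = 2) -> List[Channel]:
    """Receiver side: pruned channels become zeros; merged blocks are filled with their mean."""
    out = [[[0.0] * w for _ in range(h)] for _ in range(num_channels)]
    for idx, blocks in packets.items():
        for r, c, vals in blocks:
            cells = [(i, j) for i in range(r, min(r + block, h))
                     for j in range(c, min(c + block, w))]
            for k, (i, j) in enumerate(cells):
                out[idx][i][j] = vals[0] if len(vals) == 1 else vals[k]
    return out


if __name__ == "__main__":
    smooth = [[1.0] * 4 for _ in range(4)]       # significant and spatially uniform
    faint = [[0.01] * 4 for _ in range(4)]       # low significance, pruned
    checker = [[2.0 if (i + j) % 2 == 0 else 0.0 for j in range(4)] for i in range(4)]
    empty = [[0.0] * 4 for _ in range(4)]
    feats = [smooth, faint, checker, empty]
    packets, ratio = hierarchical_compress(feats)
    print(sorted(packets), ratio)  # [0, 2] 3.2
    restored = decompress(packets, len(feats), 4, 4)
    print(restored[0] == smooth, restored[2] == checker)  # True True
```

In this toy example, half of the four channels are kept and 2x2 blocks are used. The sender transmits 20 of 64 scalars, a 3.2-fold reduction under this accounting. The uniform channel collapses to four block means. The checkerboard channel has no spatial redundancy, so it is sent in full. A real system would also have to transmit the kept-channel indices and per-block flags. It would likely learn or tune the significance criterion rather than fix it.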